Hadoop development is one of the most in-demand career paths in the IT sector. Demand for Hadoop developers grows every year because of the massive volume of data being generated. Naukri lists more than 6,000 openings for Hadoop developers across various job locations this year. Despite this rising demand, it remains questionable whether enough skilled Hadoop developers are available. So, if you are a fresher looking to become a Hadoop developer, building the right skills is the best place to start. Every industry now looks for candidates whose skills are relevant to the degree or job role they are hiring for.

Why must Hadoop developers be highly skilled? Because they are professional programmers who need a profound understanding of the Hadoop core components, the components of HDFS, and the wider big data ecosystem. Moreover, they are responsible for designing, building, and implementing Hadoop applications.

If you want to become a big data engineer, you can join Big Data Training in Chennai and learn core concepts such as Hadoop architecture in big data and the characteristics of Big Data: volume, velocity, variety, and veracity.

In this blog, we shall discuss the core components of Hadoop, the Hadoop ecosystem components, the skills required, and how to become a Hadoop developer.

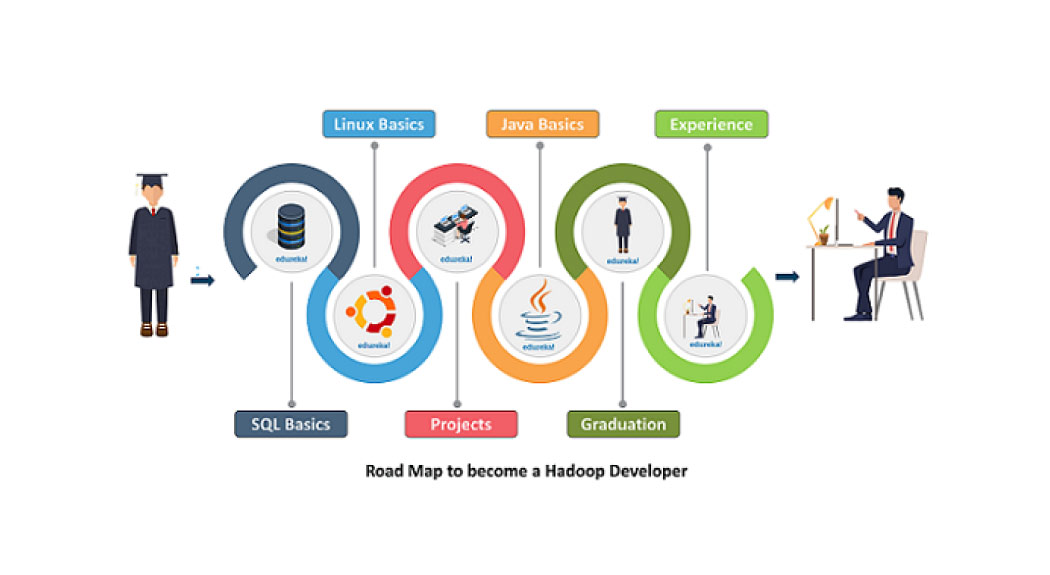

How to become a Hadoop Developer?

If you want to become a Hadoop developer, you have to acquire these skills:

- Before taking a Hadoop training course, you should have a degree related to the IT industry. A bachelor's or master's degree in Computer Science would be a plus.
- You must have a basic understanding of SQL and distributed systems.
- You need strong programming skills in languages such as Java, Python, and JavaScript (including Node.js).
- If you join a Hadoop training course, you will learn Hadoop architecture in big data and other core concepts, from basic to advanced. After completing it, you can build your own project by putting these techniques into practice.
- Hadoop developers must have an in-depth understanding of Java.

To gain an in-depth understanding of the Java programming language, you can join Java Training in Bangalore and learn the core concepts of Java, along with its tools, libraries, and frameworks.

Now that we have discussed how to become a Hadoop developer, we shall look at the skills required for the role.

Skills Required to Become a Hadoop Developer:

Hadoop developers must have skills in various technologies and be proficient in a programming language. The key concepts to becoming a Hadoop developer are detailed below.

- A fundamental understanding of the Hadoop ecosystem components and the core components of Hadoop.
- The ability to work in various operating systems, such as Linux.
- A comprehensive understanding of the Hadoop core components: MapReduce, Hadoop Distributed File System (HDFS), Yet Another Resource Negotiator (YARN), and Hadoop Common utilities.
- Familiarity with Hadoop technologies such as web notebooks, machine learning algorithms, SQL on Hadoop, databases, and stream processing technologies.
- Familiarity with data ingestion (ETL) tools such as Apache Flume and Apache Sqoop, as well as Apache HBase, Apache Hive, Apache Oozie, Apache Phoenix, Apache Pig, and Apache ZooKeeper.
- Back-end programming skills.
- Good knowledge of scripting and query languages such as Pig Latin and HiveQL.

If you want to become a Python developer or a Hadoop developer, you can join Python Training in Chennai and learn the core concepts of Python coding, arrays, tools, and libraries.

What is Hadoop?

Hadoop is a software framework for building data processing applications that are executed in a distributed computing environment. Applications built using Apache Hadoop can run on massive amounts of data distributed across large clusters of machines.

Apache Hadoop is a free and open-source platform for storing and processing massive datasets ranging from gigabytes to petabytes. Rather than requiring a single huge computer to store and analyse the data, Hadoop allows multiple clustered computers to examine massive datasets in parallel.

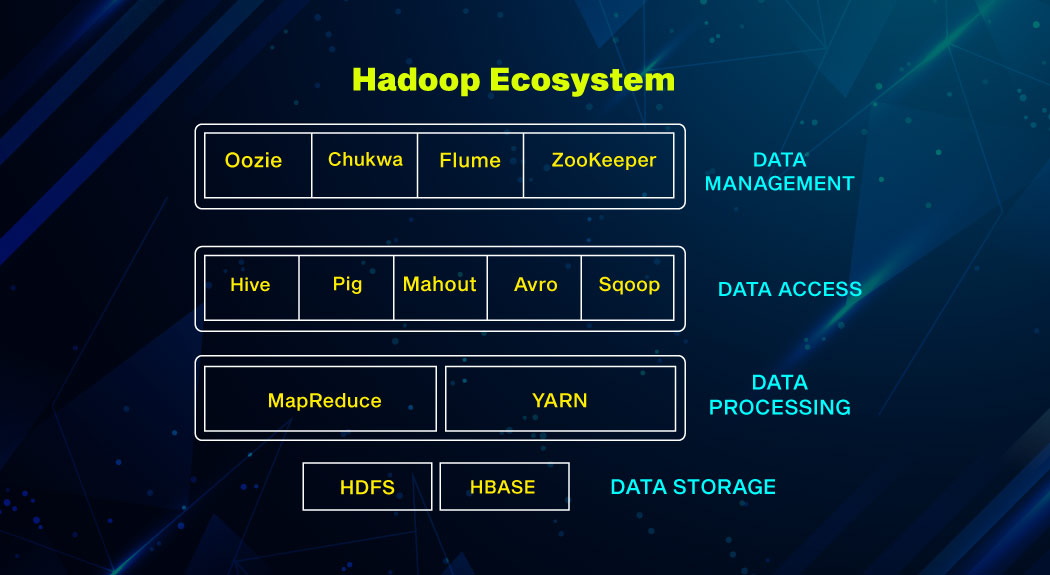

Hadoop Ecosystem

Hadoop Ecosystem is a platform that offers a wide range of services that address big data issues. It consists of Apache projects and a multitude of commercial tools and features.

Hadoop Distributed File System (HDFS), MapReduce, Yet Another Resource Negotiator (YARN), and Hadoop Common are the four core components of Hadoop. Most of the other tools complement or support these key components. Together, these technologies provide data ingestion, processing, storage, and management.

The components of Hadoop ecosystem are listed below:

- HDFS: Hadoop Distributed File System
- YARN: Yet Another Resource Negotiator
- MapReduce: Programming-based data processing
- Spark: In-memory data processing
- Pig, Hive: Query-based processing of data services
- HBase: NoSQL database
- Mahout, Spark MLlib: Machine learning algorithm libraries
- Solr, Lucene: Searching and indexing
- ZooKeeper: Managing the cluster
- Oozie: Job scheduling

All of the above are Hadoop ecosystem components. Now we shall discuss them in detail:

HDFS:

HDFS has two main components:

1. NameNode
2. DataNode

YARN:

- YARN, or Yet Another Resource Negotiator, helps manage resources across the cluster. In a nutshell, it controls the Hadoop system's scheduling and resource allocation.
- YARN has three significant components:

1. Resource Manager
2. Node Manager
3. Application Master

MapReduce:

- MapReduce carries the processing logic to the data and helps us write programs that transform large data sets into manageable ones by using distributed and parallel algorithms.
- MapReduce has two functions: Map() and Reduce().
- Map() filters and sorts the data and organizes it into groups, emitting its results as key-value pairs.
- Reduce() summarizes the mapped data by combining the values that belong to each key.
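To make the Map() and Reduce() steps concrete, here is a minimal word-count sketch in plain Python. It is not Hadoop code: the function names `map_phase`, `shuffle`, `reduce_phase`, and `word_count` are assumptions made for illustration, and everything runs in a single process rather than across a cluster.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map(): emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group mapped values by key and sort the keys (done by the framework in Hadoop)."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce(): combine all values that share a key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    """Run the three steps in sequence on an in-memory list of lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["big data big clusters", "data nodes"]))
```

In a real cluster, many copies of the map step would run in parallel on different blocks of the input, and the grouping step would move data between machines before the reduce step runs.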

PIG:

It's a platform for structuring data flows and for processing and analyzing large amounts of data.

HIVE:

Apache Hive is a data warehouse that provides data queries and analysis on Apache Hadoop.

Mahout:

It provides several libraries or functionalities, including collaborative filtering, clustering, and classification, all of which are machine learning concepts. Its libraries allow us to invoke algorithms as our needs require.

Apache Spark:

It processes data in memory, which makes it faster than the disk-based MapReduce approach described earlier.

Apache HBase:

It's a NoSQL database that supports all kinds of data and can therefore handle anything stored in Hadoop. It provides capabilities modelled on Google's Bigtable, allowing it to work efficiently with large data sets.

Apart from all of them, a few more components play an essential role in enabling Hadoop to process enormous datasets. The following are the details:

- Solr, Lucene
- ZooKeeper
- Oozie

Join Python Training in Bangalore and learn the Python programming language concepts that are essential to becoming a Hadoop developer.

Hadoop Architecture:

NameNode:

The NameNode represents every file and directory in the namespace.

DataNode:

A DataNode manages the state of an HDFS node and lets you interact with the blocks stored on it.

MasterNode:

The MasterNode enables you to perform parallel data processing using Hadoop MapReduce.

SlaveNode:

The SlaveNodes are the additional machines in the Hadoop cluster that store data and carry out complex calculations.
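The split between NameNode metadata and DataNode storage can be sketched with a toy model in Python. This is only an illustration: the `ToyNameNode` class, its methods, and the round-robin replica placement are assumptions made for this example, not how HDFS actually places blocks. The 128 MB block size and replication factor of 3 are the usual HDFS defaults in Hadoop 2.x and later.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field

BLOCK_SIZE = 128 * 1024 * 1024  # default HDFS block size (128 MB)
REPLICATION = 3                 # default HDFS replication factor


@dataclass
class ToyNameNode:
    """Holds metadata only: which blocks make up a file and which nodes hold them."""

    datanodes: list[str]
    namespace: dict[str, list[tuple[int, list[str]]]] = field(default_factory=dict)
    _next_block_id: int = 0

    def add_file(self, path: str, size_bytes: int) -> None:
        """Record the blocks of a file and assign each block to DataNodes."""
        block_count = math.ceil(size_bytes / BLOCK_SIZE)
        replicas = min(REPLICATION, len(self.datanodes))
        blocks = []
        for _ in range(block_count):
            # Round-robin placement is an assumption for illustration only.
            start = self._next_block_id % len(self.datanodes)
            holders = [
                self.datanodes[(start + i) % len(self.datanodes)]
                for i in range(replicas)
            ]
            blocks.append((self._next_block_id, holders))
            self._next_block_id += 1
        self.namespace[path] = blocks

    def locate(self, path: str) -> list[tuple[int, list[str]]]:
        """Return (block id, DataNodes holding a replica) for every block of a file."""
        return self.namespace[path]


if __name__ == "__main__":
    name_node = ToyNameNode(["dn1", "dn2", "dn3", "dn4"])
    name_node.add_file("/logs/a.log", 300 * 1024 * 1024)  # 300 MB needs 3 blocks
    for block_id, holders in name_node.locate("/logs/a.log"):
        print(block_id, holders)
```

The point of the sketch is that the NameNode never stores file contents. It only answers the question "which blocks belong to this path, and where are they?", while the DataNodes hold the blocks themselves.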

Features of Hadoop:

Network Topology In Hadoop:

The network topology affects the performance of a Hadoop cluster as the cluster grows. To keep this under control, Hadoop takes the network topology into account. Bandwidth is the most important factor to consider when designing any network, but it is not easy to measure. Hadoop therefore represents the network as a tree and uses the distance between two nodes of this tree, that is, the sum of their distances to their closest common ancestor, as a simple measure.

The amount of network bandwidth available to a process depends on where it is located. That is, the available bandwidth decreases as we move down this list:

- Processes on the same node.
- Different nodes on the same rack.
- Nodes on different racks in the same data centre.
- Nodes in different data centres.
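The four cases above can be expressed as a tree distance: count the hops from each node up to their closest common ancestor and add them together. The sketch below assumes node locations are written as `/datacentre/rack/node` paths; the function name `network_distance` and the sample paths are made up for illustration.

```python
def network_distance(a: str, b: str) -> int:
    """Hops from each node up to their closest common ancestor, added together."""
    parts_a = a.strip("/").split("/")
    parts_b = b.strip("/").split("/")
    common = 0
    for x, y in zip(parts_a, parts_b):
        if x != y:
            break
        common += 1
    return (len(parts_a) - common) + (len(parts_b) - common)


if __name__ == "__main__":
    print(network_distance("/d1/r1/n1", "/d1/r1/n1"))  # same node
    print(network_distance("/d1/r1/n1", "/d1/r1/n2"))  # same rack, different nodes
    print(network_distance("/d1/r1/n1", "/d1/r2/n3"))  # same data centre, different racks
    print(network_distance("/d1/r1/n1", "/d2/r3/n4"))  # different data centres
```

The four calls give distances of 0, 2, 4, and 6, so a larger distance corresponds to less available bandwidth.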

By now, you should understand what Hadoop is, what the Hadoop ecosystem components are, how to become a Hadoop developer, and which skills the role requires. So, if you are interested in beginning your career in the IT industry as a Hadoop developer, you can join a Hadoop training course and learn the core components of Hadoop, the Hadoop ecosystem components, and Hadoop architecture in big data. If you want to become a Java developer or a Hadoop developer, you can join Java Training in Chennai, which will help you gain a profound understanding of Java coding.